Next-Word Predictor Using LSTM

How can we use an LSTM to predict the next word in a sentence, just as a mobile keyboard predicts the next word?

Step-by-Step Process

We start with a .txt file containing the episode transcript. We open this file and put each line into a list.

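```python
file_name = '/content/S01E01 Monica Gets A Roommate.txt'

# Read the transcript, storing one stripped line per list entry
episode = []
with open(file_name, 'r') as f:
    for line in f:
        episode.append(line.strip())
```

Next, do some light cleaning of this list by dropping the empty lines:

```python
# Lines are already stripped, so an empty string marks a blank line
document = [line for line in episode if line != '']
```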

Output:

```
['The One Where Monica Gets a New Roommate (The Pilot-The Uncut Version)',
 'Written by: Marta Kauffman & David Crane',
 '[Scene: Central Perk, Chandler, Joey, Phoebe, and Monica are there.]',
 "Monica: There's nothing to tell! He's just some guy I work with!",
 "Joey: C'mon, you're going out with the guy! There's gotta be something wrong with him!",
 'Chandler: All right Joey, be nice. So does he have a hump? A hump and a hairpiece?',
 'Phoebe: Wait, does he eat chalk?',
 '(They all stare, bemused.)',
 "Phoebe: Just, 'cause, I don't want her to go through what I went through with Carl- oh!",
 "Monica: Okay, everybody relax. This is not even a date. It's just two people going out to dinner and- not having sex.",
 'Chandler: Sounds like a date to me.']
```

We do not remove stop words. Since the goal is a keyboard that suggests proper sentences, words such as "is" and "the" are needed to build a sentence.

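Next, tokenize the text: the tokenizer assigns a unique integer to each unique word.

```python
from tensorflow.keras.preprocessing.text import Tokenizer

# Learn the vocabulary from all lines of the transcript
tokenizer = Tokenizer()
tokenizer.fit_on_texts(document)
```

We can verify the unique words with word_index, which returns a dictionary whose keys are the words and whose values are their assigned integers. For example, "i" and "the" are assigned 1 and 5 respectively, and "dinner" is assigned 457. The tokenizer lowercases the text and numbers the words by frequency, starting from 1 for the most frequent word; index 0 is reserved and is later used for padding.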

```python
tokenizer.word_index
```

Output:

```
{'i': 1,
 'you': 2,
 'a': 3,
 'and': 4,
 'the': 5,
 'monica': 6,
 'to': 7,
 'rachel': 8,
 'ross': 9,
 'chandler': 10,
 'it': 11,
 ......,
 'uncut': 443,
 'version': 444,
 'written': 445,
 'marta': 446,
 'kauffman': 447,
 'crane': 448,
 'hairpiece': 449,
 'eat': 450,
 'chalk': 451,
 'bemused': 452,
 "'cause": 453,
 'carl': 454,
 'relax': 455,
 'people': 456,
 'dinner': 457,
 'standing': 458,
 .......,
}
```

Now we represent each sentence as a sequence of these integers. The first two sentences are 'The One Where Monica Gets a New Roommate (The Pilot-The Uncut Version)' and 'Written by: Marta Kauffman & David Crane'.

```python
tokenizer.texts_to_sequences(document)
```

Output:

```
[[5, 83, 125, 6, 298, 3, 174, 441, 5, 442, 5, 443, 444],
 [445, 147, 446, 447, 299, 448],
 [49, 224, 225, 10, 12, 35, 4, 6, 32, 50],
 [6, 77, 226, 7, 175, 148, 17, 84, 62, 1, 126, 33],
 [12, 149, 47, 105, 26, 33, 5, 62, 77, 106, 36, 93, 300, 33, 94],
 [10, 22, 53, 12, 36, 176, 37, 127, 63, 40, 3, 301, 3, 301, 4, 3, 449],
 [35, 107, 127, 63, 450, 451],
 [64, 22, 302, 452],
 ......
]
```


Compare each word with its assigned number: every sentence is now a sequence of numbers, each representing the corresponding word.
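To make the numbering concrete, here is a simplified pure-Python approximation of what fit_on_texts and texts_to_sequences do with default settings: lowercase the text, blank out punctuation (the apostrophe is kept), count the words, and number them by descending frequency starting at 1. This is a sketch for illustration, not Keras's actual implementation; the helpers simple_tokenize, fit_word_index and to_sequences are hypothetical names.

```python
from collections import Counter

# Default filter characters of the Keras Tokenizer; the apostrophe is not
# among them, which is why tokens such as "can't" and "'cause" survive.
FILTERS = '!"#$%&()*+,-./:;<=>?@[\\]^_`{|}~\t\n'
_TABLE = str.maketrans({c: ' ' for c in FILTERS})


def simple_tokenize(text):
    """Hypothetical helper: lowercase, blank out filter chars, split on whitespace."""
    return text.lower().translate(_TABLE).split()


def fit_word_index(texts):
    """Hypothetical helper: number words by descending frequency, starting at 1."""
    counts = Counter()
    for text in texts:
        counts.update(simple_tokenize(text))
    # sorted() is stable, so words with equal counts keep first-seen order
    ordered = sorted(counts, key=counts.get, reverse=True)
    # Index 0 is never assigned; it stays free for padding
    return {word: i for i, word in enumerate(ordered, start=1)}


def to_sequences(texts, word_index):
    """Hypothetical helper: replace each known word with its index."""
    return [[word_index[w] for w in simple_tokenize(t) if w in word_index]
            for t in texts]


docs = ["Monica: There's nothing to tell!",
        "Phoebe: Wait, does he eat chalk?",
        "Monica: Wait!"]
word_index = fit_word_index(docs)
print(word_index)
print(to_sequences(docs, word_index))  # [[1, 3, 4, 5, 6], [7, 2, 8, 9, 10, 11], [1, 2]]
```

Here "monica" and "wait" appear twice, so they get the smallest indices; in the same way, "i" gets index 1 in the real transcript because it is the most frequent word there.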

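Total number of unique words (the vocabulary):

```python
# Number of distinct words; layers sized by the vocabulary need one extra
# slot (vocabulary + 1) because index 0 is reserved for padding
vocabulary = len(tokenizer.word_index)
```

The main structure: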

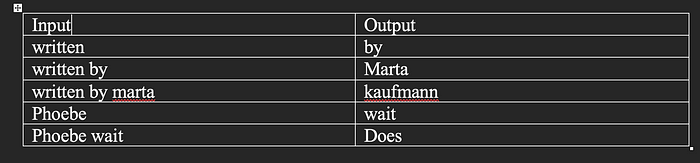

We build a supervised ML model: from each sentence we create training examples whose input is the first word, then the first two words, then the first three words, and so on until the sentence is complete. In each case, the target is the word that comes next. For example:

1st sentence -> 'written by: marta kauffman & david crane'

2nd sentence -> 'phoebe: wait, does he eat chalk?'
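Applied to the first example sentence, the idea looks like this. The sketch works on words rather than token ids so the pairs are easy to read; prefix_examples is a hypothetical helper, and it assumes punctuation has already been removed, as the tokenizer does.

```python
def prefix_examples(words):
    """Hypothetical helper: turn one sentence into (input_words, next_word) pairs.

    The input grows by one word at a time; the target is the word that follows.
    """
    return [(words[:i], words[i]) for i in range(1, len(words))]


sentence = "written by marta kauffman david crane".split()
for inputs, target in prefix_examples(sentence):
    print(" ".join(inputs), "->", target)
# written -> by
# written by -> marta
# written by marta -> kauffman
# written by marta kauffman -> david
# written by marta kauffman david -> crane
```

A sentence with n words yields n - 1 training pairs; a one-word sentence yields none.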

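```python
# For every tokenized sentence, collect all prefixes of length >= 2;
# the last token of each prefix is the word to predict
input_sequence = []
for sent in tokenizer.texts_to_sequences(document):
    for i in range(1, len(sent)):
        input_sequence.append(sent[:i+1])
```

Output of the above code: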

```
[[5, 83],
 [5, 83, 125],
 [5, 83, 125, 6],
 [5, 83, 125, 6, 298],
 [5, 83, 125, 6, 298, 3],
 [5, 83, 125, 6, 298, 3, 174],
 [5, 83, 125, 6, 298, 3, 174, 441],
 [5, 83, 125, 6, 298, 3, 174, 441, 5],
 [5, 83, 125, 6, 298, 3, 174, 441, 5, 442],
 [5, 83, 125, 6, 298, 3, 174, 441, 5, 442, 5],
 [5, 83, 125, 6, 298, 3, 174, 441, 5, 442, 5, 443],
 [5, 83, 125, 6, 298, 3, 174, 441, 5, 442, 5, 443, 444],
 [445, 147],
 [445, 147, 446],
 [445, 147, 446, 447],
 [445, 147, 446, 447, 299],
 [445, 147, 446, 447, 299, 448],
 .....
 .....
]
```

In each list, the last element is the output (the word to predict) and the remaining elements are the input. However, the lists do not all have the same number of elements, so we need to pad them.

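First, find the length of the longest sequence, then pad every sequence to that length with zeros at the front (padding='pre'):

```python
from tensorflow.keras.utils import to_categorical
from tensorflow.keras.preprocessing.sequence import pad_sequences

# Length of the longest prefix sequence
max_len = max(len(seq) for seq in input_sequence)

# Left-pad with zeros so that the target word stays in the last column
padded_sent = pad_sequences(input_sequence, maxlen=max_len, padding='pre')
```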
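For intuition, here is a pure-Python sketch of the remaining preprocessing applied to the prefixes of the 'written by ...' sentence: left padding with zeros (what padding='pre' does when no sequence is longer than maxlen), splitting each row into input and target, and one-hot encoding targets the way to_categorical does. The helpers pad_pre, split_xy and one_hot, and the tiny num_classes value, are hypothetical choices for this example only.

```python
def pad_pre(sequences, maxlen, value=0):
    """Hypothetical helper: left-pad each sequence with `value` up to `maxlen`."""
    # Assumes no sequence is longer than maxlen (true when maxlen is the max length)
    return [[value] * (maxlen - len(seq)) + list(seq) for seq in sequences]


def split_xy(rows):
    """Every column except the last is input; the last column is the target."""
    return [row[:-1] for row in rows], [row[-1] for row in rows]


def one_hot(labels, num_classes):
    """One row per label with a single 1 at the label's index."""
    return [[1 if j == label else 0 for j in range(num_classes)] for label in labels]


# Prefixes of 'written by marta kauffman david crane' (token ids from the article)
prefixes = [[445, 147], [445, 147, 446], [445, 147, 446, 447],
            [445, 147, 446, 447, 299], [445, 147, 446, 447, 299, 448]]
longest = max(len(seq) for seq in prefixes)  # 6
padded = pad_pre(prefixes, longest)
inputs, targets = split_xy(padded)
print(padded[0])               # [0, 0, 0, 0, 445, 147]
print(inputs[0], targets[0])   # [0, 0, 0, 0, 445] 147

# A toy vocabulary of 4 words plus the reserved index 0 gives 5 classes
print(one_hot([2, 4], num_classes=5))  # [[0, 0, 1, 0, 0], [0, 0, 0, 0, 1]]
```

Padding on the left keeps the real words next to the position being predicted, so the last column of every row is always the target.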

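Split the padded sentences into X (every column except the last) and y (the last column):

```python
X = padded_sent[:, :-1]  # inputs: every token except the last
y = padded_sent[:, -1]   # targets: the last token of each row
```

Output of X: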

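```
array([[   0,    0,    0, ...,    0,    0,    5],
       [   0,    0,    0, ...,    0,    5,   83],
       [   0,    0,    0, ...,    5,   83,  125],
       ...,
       [   0,    0,    0, ...,   31,  296,  297],
       [   0,    0,    0, ...,  296,  297,   18],
       [   0,    0,    0, ...,  297,   18, 1016]], dtype=int32)
```

One-hot encode y so that each target has the same dimension as the vocabulary. The number of classes must be larger than the largest word index, so it is the vocabulary size plus one for the reserved index 0:

```python
# 1018 classes; this should equal vocabulary + 1 (index 0 is reserved for padding)
y = to_categorical(y, num_classes=1018)
```

Training a simple LSTM model: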

```python
from tensorflow.keras.layers import Embedding, LSTM, Dense
from tensorflow.keras.models import Sequential

model = Sequential()
# Map each word index to a dense 100-dimensional vector
model.add(Embedding(input_dim=1018, output_dim=100, input_length=max_len-1))
# A single LSTM layer with 250 units reads the whole padded sequence
model.add(LSTM(250))
# One softmax output per word index: a probability for every possible next word
model.add(Dense(1018, activation='softmax'))

model.compile(loss='categorical_crossentropy', optimizer='adam', metrics=['accuracy'])
model.summary()
```

Explanation of the trainable parameters:

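1. In the embedding layer, each word is represented as a 100-dimensional vector, which is why output_dim is 100. The layer learns one such vector per word index, so it has 1018 × 100 = 101,800 trainable parameters.

2. This 100-dimensional vector is the input to the LSTM layer at each time step. The number of trainable parameters in an LSTM layer is Total_Parameters = 4 × (input_dim × units + units × units + units), where the factor 4 covers the input, forget and output gates plus the candidate cell state. Here that is 4 × (100 × 250 + 250 × 250 + 250) = 351,000.

3. The Dense layer is a simple fully connected (FC) layer. Its trainable parameters are 250 × 1018 + 1018 = 255,518 (weights plus biases).

4. model.add(Dense(1018, activation='softmax')): the layer has 1018 neurons/units, and the softmax turns their outputs into a probability for each of the 1018 word indices. At prediction time we pick the word with the highest probability.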
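The counts in the summary can be checked with a few lines of arithmetic. This sketch uses the layer sizes of this model (1018 word indices, embedding size 100, 250 LSTM units) and assumes the default Keras layers with biases; the function names are hypothetical.

```python
def embedding_params(vocab_size, embed_dim):
    # One trainable vector per vocabulary index
    return vocab_size * embed_dim


def lstm_params(input_dim, units):
    # Four weight sets (input, forget and output gates plus the cell candidate),
    # each with input weights, recurrent weights and a bias vector
    return 4 * (input_dim * units + units * units + units)


def dense_params(input_dim, units):
    # Weight matrix plus one bias per output unit
    return input_dim * units + units


emb = embedding_params(1018, 100)
lstm = lstm_params(100, 250)
dense = dense_params(250, 1018)
print(emb, lstm, dense, emb + lstm + dense)  # 101800 351000 255518 708318
```

The total matches the "Total params: 708318" line of the model summary.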

```
Model: "sequential_8"

Layer (type)                 Output Shape           Param #
embedding_7 (Embedding)      (None, 159, 100)       101800
lstm_7 (LSTM)                (None, 250)            351000
dense_5 (Dense)              (None, 1018)           255518

Total params: 708318 (2.70 MB)
Trainable params: 708318 (2.70 MB)
```

Fitting the model:

```python
model.fit(X, y, epochs=100)
```

Predicting the next 15 words with our model:

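```python
import numpy as np

sample_text = "joey"

# Generate 15 words, feeding each prediction back in as input
for i in range(15):
    # Convert the current text into a sequence of word indices
    token_text = tokenizer.texts_to_sequences([sample_text])[0]
    # Left-pad to the input length used in training (max_len - 1)
    padded_text = pad_sequences([token_text], maxlen=max_len-1, padding='pre')
    # Index of the most probable next word
    pos = int(np.argmax(model.predict(padded_text)))
    # Map the index back to its word (index_word is the reverse of word_index)
    next_word = tokenizer.index_word.get(pos)
    if next_word is not None:
        sample_text = sample_text + " " + next_word
    print(sample_text)
```

Output: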

```
1/1 [==============================] - 0s 84ms/step
joey i
1/1 [==============================] - 0s 126ms/step
joey i can't
1/1 [==============================] - 0s 96ms/step
joey i can't believe
1/1 [==============================] - 0s 112ms/step
joey i can't believe what
1/1 [==============================] - 0s 111ms/step
joey i can't believe what i'm
1/1 [==============================] - 0s 95ms/step
joey i can't believe what i'm hearing
1/1 [==============================] - 0s 94ms/step
joey i can't believe what i'm hearing here
1/1 [==============================] - 0s 104ms/step
joey i can't believe what i'm hearing here with
1/1 [==============================] - 0s 83ms/step
joey i can't believe what i'm hearing here with my
1/1 [==============================] - 0s 89ms/step
joey i can't believe what i'm hearing here with my entire
1/1 [==============================] - 0s 87ms/step
joey i can't believe what i'm hearing here with my entire life
1/1 [==============================] - 0s 97ms/step
joey i can't believe what i'm hearing here with my entire life she
1/1 [==============================] - 0s 130ms/step
joey i can't believe what i'm hearing here with my entire life she always
1/1 [==============================] - 0s 82ms/step
joey i can't believe what i'm hearing here with my entire life she always drank
1/1 [==============================] - 0s 87ms/step
joey i can't believe what i'm hearing here with my entire life she always drank me
```

Another example, starting from "It is my":

```python
sample_text = "It is my"

# Same greedy loop as above, starting from a different seed text
for i in range(15):
    token_text = tokenizer.texts_to_sequences([sample_text])[0]
    padded_text = pad_sequences([token_text], maxlen=max_len-1, padding='pre')
    pos = int(np.argmax(model.predict(padded_text)))
    next_word = tokenizer.index_word.get(pos)
    if next_word is not None:
        sample_text = sample_text + " " + next_word
    print(sample_text)
```

Output:

```
1/1 [==============================] - 0s 153ms/step
It is my image
1/1 [==============================] - 0s 112ms/step
It is my image she
1/1 [==============================] - 0s 89ms/step
It is my image she walked
1/1 [==============================] - 0s 93ms/step
It is my image she walked out
1/1 [==============================] - 0s 130ms/step
It is my image she walked out on
1/1 [==============================] - 0s 104ms/step
It is my image she walked out on me
1/1 [==============================] - 0s 85ms/step
It is my image she walked out on me i
1/1 [==============================] - 0s 89ms/step
It is my image she walked out on me i uh
1/1 [==============================] - 0s 84ms/step
It is my image she walked out on me i uh i
1/1 [==============================] - 0s 117ms/step
It is my image she walked out on me i uh i am
1/1 [==============================] - 0s 94ms/step
It is my image she walked out on me i uh i am i
1/1 [==============================] - 0s 89ms/step
It is my image she walked out on me i uh i am i am
1/1 [==============================] - 0s 85ms/step
It is my image she walked out on me i uh i am i am totally
1/1 [==============================] - 0s 87ms/step
It is my image she walked out on me i uh i am i am totally naked
1/1 [==============================] - 0s 83ms/step
It is my image she walked out on me i uh i am i am totally naked a
```

Code/Notebook: https://github.com/sambhavm22/Next-Word-Predictor-using-LSTM/blob/main/NextWordPredictor.ipynb