End-to-End NLP Pipeline

In this blog, we will learn about the basic pipeline of an NLP application.

1. Problem Definition:

- Goal Setting: Clearly define the task (e.g., sentiment analysis, machine translation, chatbot).

2. Data Collection and Annotation:

- Data Collection: Collect raw textual data from sources like APIs, web scraping, logs, or user input.

- Data Annotation: Label the data for supervised tasks (e.g., tagging sentiment or entity types).

3. Text Preprocessing:

- Tokenisation: Splitting text into smaller units like words or subwords (tokens). Example:

"I love NLP!"→["I", "love", "NLP", "!"]

- Lowercasing: Converting all text to lowercase for uniformity. Example:

"Text Processing"→"text processing"

- Stopword Removal: Removing common words (e.g., “the,” “is”) that do not contribute much meaning. Example:

"This is a book"→"book"

- Stemming/Lemmatisation: Reducing words to their root form (e.g., “running” → “run”). Stemming strips suffixes heuristically, while lemmatisation maps a word to its dictionary form. Example:

"running"→"run" (lemmatisation retains grammatical meaning).

- Handling Punctuation/Special Characters: Removing or handling symbols, numbers, and punctuation marks. Example:

"He scored 90%!"→"He scored"

4. Text Representation and Feature Engineering:

- Bag of Words (BOW): Representing text as a frequency distribution of words without considering order. Example:

"cat eats fish"→[1, 1, 1, 0, 0] (vocabulary: ["cat", "eats", "fish", "dog", "runs"])

- TF-IDF: Adjusting word frequencies by their importance in the entire corpus (Term Frequency-Inverse Document Frequency).

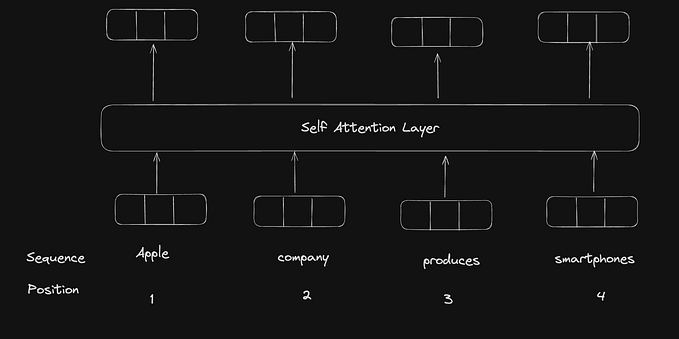

- Word Embeddings: Map words to dense vector spaces capturing semantic meaning. Examples: Word2Vec, GloVe, FastText, or contextual embeddings like BERT.
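The Bag of Words and TF-IDF representations described above can be sketched in a few lines of plain Python. The three-document corpus is made up for illustration, and the weighting used here (tf = count / document length, idf = log(N / df)) is only one common variant; libraries such as scikit-learn apply additional smoothing and normalisation, so their numbers will differ.

```python
import math
from collections import Counter


def bag_of_words(tokens, vocabulary):
    """Count how often each vocabulary word occurs in the token list."""
    counts = Counter(tokens)
    return [counts[word] for word in vocabulary]


def tf_idf(corpus, vocabulary):
    """Return one TF-IDF vector per document (corpus is a list of token lists).

    Assumed variant: tf = count / document length, idf = log(N / df).
    """
    n_docs = len(corpus)
    # Document frequency: number of documents containing each word.
    df = {w: sum(1 for doc in corpus if w in doc) for w in vocabulary}
    vectors = []
    for doc in corpus:
        counts = Counter(doc)
        vector = []
        for w in vocabulary:
            tf = counts[w] / len(doc) if doc else 0.0
            idf = math.log(n_docs / df[w]) if df[w] else 0.0
            vector.append(tf * idf)
        vectors.append(vector)
    return vectors


vocabulary = ["cat", "eats", "fish", "dog", "runs"]
# Toy corpus, invented for this example.
corpus = [["cat", "eats", "fish"], ["dog", "eats", "fish"], ["dog", "runs"]]

print(bag_of_words(corpus[0], vocabulary))  # [1, 1, 1, 0, 0]
print([round(x, 3) for x in tf_idf(corpus, vocabulary)[0]])
# [0.366, 0.135, 0.135, 0.0, 0.0]
```

In the first document, "cat" gets a higher weight than "eats" or "fish" because it appears in only one of the three documents.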

Feature engineering then extracts meaningful features that enhance model performance:

- N-grams: Considering word sequences of size N to capture context (e.g., bigrams, trigrams). Example:

"data science"→ Bigrams: ["data science"]

- Part of Speech (POS) Tagging: Assigning grammatical categories (noun, verb, etc.) to the words in the text. Example:

"NLP is fun"→[Noun, Verb, Adjective]

- Named Entity Recognition (NER): Identifying entities like names, organisations, dates, etc., within the text. Example:

"Google was founded in 1998"→["Google" → Organisation, "1998" → Date]

5. Model Selection and Training:

- Classification Models: For tasks like sentiment analysis, spam detection, etc., where text is categorised into predefined labels.

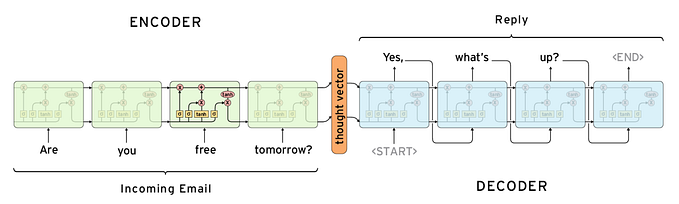

Models: Logistic Regression, Naive Bayes, BERT fine-tuning.

- Sequence Models: For tasks like machine translation or text generation, models like LSTMs, GRUs, or Transformers are used to handle sequential data.

- Contextual Models: Using pre-trained models like BERT or GPT to capture contextualised embeddings and apply transfer learning.
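As a small illustration of the classification case, here is a sketch of a multinomial Naive Bayes classifier with add-one (Laplace) smoothing, written from scratch. The class name and the four training examples are assumptions made up for this post; in practice you would more likely use an existing implementation such as the one in scikit-learn.

```python
import math
from collections import Counter, defaultdict


class NaiveBayesClassifier:
    """Minimal multinomial Naive Bayes with add-one smoothing (illustrative)."""

    def fit(self, documents, labels):
        self.class_counts = Counter(labels)       # documents per class
        self.word_counts = defaultdict(Counter)   # word counts per class
        self.vocabulary = set()
        for tokens, label in zip(documents, labels):
            self.word_counts[label].update(tokens)
            self.vocabulary.update(tokens)
        return self

    def predict(self, tokens):
        total_docs = sum(self.class_counts.values())
        vocab_size = len(self.vocabulary)
        best_label, best_score = None, -math.inf
        for label, doc_count in self.class_counts.items():
            # Log prior plus the sum of smoothed log likelihoods.
            score = math.log(doc_count / total_docs)
            total_words = sum(self.word_counts[label].values())
            for token in tokens:
                if token not in self.vocabulary:
                    continue  # ignore words never seen during training
                count = self.word_counts[label][token]
                score += math.log((count + 1) / (total_words + vocab_size))
            if score > best_score:
                best_label, best_score = label, score
        return best_label


# Toy training data, invented for this example.
train_docs = [["great", "movie"], ["loved", "it"], ["terrible", "movie"], ["hated", "it"]]
train_labels = ["pos", "pos", "neg", "neg"]

clf = NaiveBayesClassifier().fit(train_docs, train_labels)
print(clf.predict(["great", "film"]))   # pos
print(clf.predict(["hated", "movie"]))  # neg
```

Working in log space avoids numerical underflow when many small probabilities are multiplied together.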

6. Evaluation:

- Metrics: Common metrics include accuracy, precision, recall, and F1 score for classification tasks, and BLEU and ROUGE for text generation tasks.

- Cross-validation: Checking that model performance is stable and generalises well to unseen data by training and evaluating on several different data splits.
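The classification metrics and the idea of cross-validation splits can be sketched as follows. The function names are chosen for this example, and the folds are simple contiguous slices; real setups usually shuffle (and often stratify) the data first, which is omitted here.

```python
def precision_recall_f1(y_true, y_pred, positive):
    """Compute precision, recall and F1 for one class treated as positive."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


def k_fold_indices(n_samples, k):
    """Yield (train_indices, test_indices) for k contiguous folds."""
    # Spread the remainder over the first folds so sizes differ by at most one.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test = list(range(start, start + size))
        train = [i for i in range(n_samples) if i < start or i >= start + size]
        yield train, test
        start += size


y_true = ["pos", "pos", "neg", "neg"]
y_pred = ["pos", "neg", "neg", "pos"]
print(precision_recall_f1(y_true, y_pred, positive="pos"))  # (0.5, 0.5, 0.5)

for train, test in k_fold_indices(5, 2):
    print(train, test)
# [3, 4] [0, 1, 2]
# [0, 1, 2] [3, 4]
```

Each sample lands in exactly one test fold, so averaging the metric over the folds uses every example for evaluation once.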

7. Fine-tuning:

- Hyperparameter Tuning: Optimising parameters like learning rate, batch size, and model depth.

- Pre-trained Models: Adapt large-scale models like BERT or GPT to task-specific data.
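One simple way to tune hyperparameters is an exhaustive grid search, sketched below. The `fake_validation_score` function is a hypothetical stand-in for "train the model with these settings and return a validation score"; its formula is invented purely so the example runs.

```python
from itertools import product


def grid_search(param_grid, evaluate):
    """Try every combination in param_grid and return the best (params, score)."""
    names = list(param_grid)
    best_params, best_score = None, float("-inf")
    for values in product(*(param_grid[name] for name in names)):
        params = dict(zip(names, values))
        score = evaluate(params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score


def fake_validation_score(params):
    """Hypothetical scoring function; a real one would train and validate a model."""
    return -abs(params["learning_rate"] - 0.01) - 0.001 * abs(params["batch_size"] - 32)


grid = {"learning_rate": [0.1, 0.01, 0.001], "batch_size": [16, 32, 64]}
best_params, best_score = grid_search(grid, fake_validation_score)
print(best_params)  # {'learning_rate': 0.01, 'batch_size': 32}
```

Grid search grows multiplicatively with every added parameter, so for larger search spaces random search or Bayesian optimisation is often preferred.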

8. Deployment and Integration:

- Model Serialisation: Save the trained model in the format of its framework (e.g., a TensorFlow SavedModel or a PyTorch state_dict checkpoint) so it can be reloaded for inference.

- API Integration: Wrap the model into an API using Flask, FastAPI, or Django for use in applications.

- Infrastructure: Deploy the model on cloud platforms such as AWS SageMaker, GCP AI Platform, or Azure ML, or on edge devices.

- Containers: Use Docker and orchestrate with Kubernetes.
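The serialisation and API steps above can be illustrated without any web framework. In this sketch the "model" is a hypothetical dictionary of keyword weights saved as JSON, standing in for a real TensorFlow or PyTorch artefact, and `handle_request` is a framework-agnostic function that a Flask or FastAPI route could call. All names here are assumptions for illustration.

```python
import json
import os
import tempfile


def save_model(model, path):
    """Serialise the (toy) model parameters to a JSON file."""
    with open(path, "w", encoding="utf-8") as f:
        json.dump(model, f)


def load_model(path):
    """Load model parameters previously written by save_model."""
    with open(path, encoding="utf-8") as f:
        return json.load(f)


def predict(model, text):
    """Score text with keyword weights; non-negative scores count as positive."""
    tokens = text.lower().split()
    score = model["bias"] + sum(model["weights"].get(tok, 0.0) for tok in tokens)
    return "positive" if score >= 0 else "negative"


def handle_request(model, payload):
    """Validate a request payload and return a response dict."""
    text = payload.get("text")
    if not isinstance(text, str) or not text.strip():
        return {"status": 400, "error": "field 'text' must be a non-empty string"}
    return {"status": 200, "label": predict(model, text)}


# Toy model parameters, invented for this example.
model = {"weights": {"great": 1.0, "love": 1.0, "terrible": -1.0, "hate": -1.0}, "bias": 0.0}

with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "model.json")
    save_model(model, path)
    restored = load_model(path)

print(handle_request(restored, {"text": "I love NLP"}))
# {'status': 200, 'label': 'positive'}
```

Keeping input validation and prediction in a plain function makes the logic easy to unit test before it is wrapped in a route and packaged into a container.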

9. Monitoring and Maintenance:

- Real-time Monitoring: Track model performance metrics (e.g., latency, accuracy drift).

- Feedback Loop: Use user feedback or new data to retrain and fine-tune the model.

- Dataset Updates: Regularly update datasets to capture evolving language trends (e.g., slang, domain changes).
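A very small monitoring sketch, assuming that ground-truth feedback arrives for at least some predictions: it keeps a rolling window of outcomes and latencies and flags drift when windowed accuracy falls too far below a baseline. The class name, window size, and thresholds are illustrative assumptions, not recommended values.

```python
from collections import deque


class RollingMonitor:
    """Track accuracy and latency over the most recent predictions."""

    def __init__(self, window_size, baseline_accuracy, max_drop):
        self.window_size = window_size
        self.baseline_accuracy = baseline_accuracy
        self.max_drop = max_drop
        # deque(maxlen=...) silently discards the oldest entry when full.
        self.outcomes = deque(maxlen=window_size)
        self.latencies_ms = deque(maxlen=window_size)

    def record(self, correct, latency_ms):
        self.outcomes.append(1 if correct else 0)
        self.latencies_ms.append(latency_ms)

    def accuracy(self):
        return sum(self.outcomes) / len(self.outcomes) if self.outcomes else None

    def mean_latency_ms(self):
        if not self.latencies_ms:
            return None
        return sum(self.latencies_ms) / len(self.latencies_ms)

    def drift_detected(self):
        """True once the window is full and accuracy dropped more than max_drop."""
        if len(self.outcomes) < self.window_size:
            return False
        return self.baseline_accuracy - self.accuracy() > self.max_drop


monitor = RollingMonitor(window_size=4, baseline_accuracy=0.9, max_drop=0.1)
for _ in range(4):
    monitor.record(correct=True, latency_ms=20.0)
print(monitor.drift_detected())  # False

for _ in range(4):
    monitor.record(correct=False, latency_ms=30.0)
print(monitor.accuracy(), monitor.drift_detected())  # 0.0 True
```

A drift flag like this could be the trigger for the feedback loop: collect the recent misclassified examples, add them to the dataset, and retrain.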

Conclusion:

The end-to-end NLP pipeline provides a structured framework to tackle a wide range of text-based tasks effectively. By breaking down the workflow into sequential stages (data collection, text cleaning, preprocessing, feature engineering, modelling, evaluation, deployment, and monitoring), it ensures the systematic handling of text data from raw input to actionable insights.